7 Reporting and Results

Chapter 7 of the Dynamic Learning Maps® (DLM®) Alternate Assessment System 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022) provides an overview of data files and score reports delivered to state education agencies and describes assessment results for the 2021–2022 academic year.

This chapter presents 2024–2025 student participation data; the percentage of students achieving at each performance level; and subgroup performance by gender, race, ethnicity, and English learner status. This chapter also reports the distribution of students by the highest linkage level mastered during spring 2025.

For a complete description of score reports and interpretive guides, see Chapter 7 of the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

7.1 Student Participation

During spring 2025, assessments were administered to 89,596 students in 15 states adopting the Year-End model. Table 7.1 displays counts of students tested in each state. The assessments were administered by 23,506 educators in 12,190 schools and 3,825 school districts. A total of 1,450,232 test sessions were administered during the spring assessment window. One test session is one testlet taken by one student. Only test sessions that were complete at the close of the spring assessment window counted toward the total sessions.

| State | Students (n) |
|---|---|
| Alaska | 472 |
| Colorado | 3,808 |
| Illinois | 14,384 |
| Maryland | 5,402 |
| New Hampshire | 682 |
| New Jersey | 12,040 |
| New Mexico | 1,980 |
| New York | 18,401 |
| Oklahoma | 4,845 |
| Palau | 23 |
| Pennsylvania | 16,195 |
| Rhode Island | 869 |
| Utah | 4,289 |
| West Virginia | 1,479 |
| Wisconsin | 4,727 |
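The session-counting rule described above (one session is one testlet taken by one student, and only sessions complete at the close of the window count) can be sketched as follows. This is a minimal illustration, not the DLM system's logic; the `TestSession` record, its field names, and the window-close date are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass
class TestSession:
    """Hypothetical record: one testlet taken by one student."""
    student_id: str
    testlet_id: str
    completed_at: Optional[datetime]  # None if the testlet was never completed


def count_completed_sessions(sessions: Iterable[TestSession], window_close: datetime) -> int:
    """Count only sessions that were complete at the close of the assessment window."""
    return sum(
        1
        for s in sessions
        if s.completed_at is not None and s.completed_at <= window_close
    )


# Illustrative data only; the window-close date is hypothetical.
close = datetime(2025, 6, 1)
sessions = [
    TestSession("s1", "t1", datetime(2025, 4, 10)),  # complete before close: counted
    TestSession("s1", "t2", None),                   # never completed: not counted
    TestSession("s2", "t1", datetime(2025, 6, 5)),   # completed after close: not counted
]
completed = count_completed_sessions(sessions, close)  # 1
```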

Table 7.2 summarizes the number of students assessed in each grade. In Grades 3–8, more than 11,470 students participated in the DLM assessment at each grade. In high school, the largest number of students participated in Grade 11, and the smallest number participated in Grade 12. The differences in high school grade-level participation can be traced to differing state-level policies about the grade(s) in which students are assessed.

| Grade | Students (n) |
|---|---|
| 3 | 12,760 |
| 4 | 12,054 |
| 5 | 12,027 |
| 6 | 11,888 |
| 7 | 11,822 |
| 8 | 11,478 |
| 9 | 5,734 |
| 10 | 3,049 |
| 11 | 8,619 |
| 12 | 165 |

Table 7.3 summarizes the demographic characteristics of the students who participated in the spring 2025 administration. The majority of participants were male (68%), White (51%), and non-Hispanic (74%). About 7% of students were monitored or eligible for English learning services.

| Subgroup | n | % |
|---|---|---|
| Gender | | |
| Male | 61,223 | 68.3 |
| Female | 28,313 | 31.6 |
| Nonbinary/undesignated | 59 | 0.1 |
| Prefer Not to Say | 1 | <0.1 |
| Race | | |
| White | 46,136 | 51.5 |
| African American | 19,127 | 21.3 |
| Two or more races | 15,774 | 17.6 |
| Asian | 6,047 | 6.7 |
| American Indian | 1,900 | 2.1 |
| Native Hawaiian or Pacific Islander | 472 | 0.5 |
| Alaska Native | 140 | 0.2 |
| Hispanic ethnicity | | |
| Non-Hispanic | 65,899 | 73.6 |
| Hispanic | 23,697 | 26.4 |
| English learning (EL) participation | | |
| Not EL eligible or monitored | 83,317 | 93.0 |
| EL eligible or monitored | 6,279 | 7.0 |
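The percentages in Table 7.3 can be reproduced from the counts. The helper below is a sketch with a hypothetical name; its handling of very small and very large values mirrors the "<0.1" and ">99.9" display conventions that appear in the tables of this chapter, not a documented DLM rule.

```python
def format_percent(count: int, total: int) -> str:
    """Format count/total as a percentage with one decimal place.

    Assumed display conventions (inferred from the tables): a nonzero value
    that rounds to 0.0 is shown as "<0.1", and 100% is shown as ">99.9".
    """
    if total <= 0:
        raise ValueError("total must be positive")
    text = f"{100 * count / total:.1f}"
    if count > 0 and text == "0.0":
        return "<0.1"
    if text == "100.0":
        return ">99.9"
    return text


TOTAL = 89_596  # spring 2025 participants (Table 7.3)
gender_counts = {
    "Male": 61_223,
    "Female": 28_313,
    "Nonbinary/undesignated": 59,
    "Prefer Not to Say": 1,
}
gender_pct = {group: format_percent(n, TOTAL) for group, n in gender_counts.items()}
# {'Male': '68.3', 'Female': '31.6', 'Nonbinary/undesignated': '0.1', 'Prefer Not to Say': '<0.1'}
```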

In addition to the spring assessment window, instructionally embedded assessments are available for educators to administer optionally during the year. Results from the instructionally embedded assessments do not contribute to final summative scoring but can be used to guide instructional decision-making. State education agencies are allowed to set their own policies regarding requirements for participation in the instructionally embedded window. Table 7.4 summarizes, by state, the number of students who completed at least one instructionally embedded assessment. A total of 1,938 students in 10 states took at least one instructionally embedded testlet during the 2024–2025 academic year.

| State | Students (n) |
|---|---|
| Colorado | 16 |
| Maryland | 108 |
| New Jersey | 897 |
| New Mexico | 4 |
| New York | 76 |
| Oklahoma | 372 |
| Pennsylvania | 27 |
| Utah | 425 |
| West Virginia | 4 |
| Wisconsin | 9 |

Table 7.5 summarizes, by grade, the number of instructionally embedded testlets taken in ELA and mathematics. Across all states, students took 11,701 ELA testlets and 11,342 mathematics testlets during the instructionally embedded window.

| Grade | English language arts | Mathematics |
|---|---|---|
| 3 | 1,460 | 1,302 |
| 4 | 1,303 | 1,209 |
| 5 | 1,514 | 1,449 |
| 6 | 1,684 | 1,491 |
| 7 | 1,560 | 1,484 |
| 8 | 1,623 | 1,553 |
| 9 | 283 | 331 |
| 10 | 214 | 197 |
| 11 | 1,977 | 2,239 |
| 12 | 83 | 87 |
| Total | 11,701 | 11,342 |

7.2 Student Performance

Student performance on DLM assessments is interpreted using cut points determined by a standard setting study. For a description of the standard setting process used to determine the cut points, see Chapter 6 of this manual. A student’s performance level is determined by applying these cut points to the total number of linkage levels mastered across the assessed Essential Elements (EEs).

For the spring 2025 administration, student performance was reported using four performance levels:

- The student demonstrates Emerging understanding of and ability to apply content knowledge and skills represented by the EEs.

- The student’s understanding of and ability to apply targeted content knowledge and skills represented by the EEs is Approaching the Target.

- The student’s understanding of and ability to apply content knowledge and skills represented by the EEs is At Target. This performance level is considered meeting achievement expectations.

- The student demonstrates Advanced understanding of and ability to apply targeted content knowledge and skills represented by the EEs.
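The mapping from the total number of linkage levels mastered to a performance level can be sketched with a sorted list of cut points. The cut values below are hypothetical placeholders (actual cut points come from standard setting and vary by grade and subject), and the function names are illustrative.

```python
from bisect import bisect_right
from typing import Sequence

PERFORMANCE_LEVELS = ["Emerging", "Approaching the Target", "At Target", "Advanced"]


def performance_level(total_mastered: int, cuts: Sequence[int]) -> str:
    """Map total linkage levels mastered to a performance level.

    `cuts` holds three ascending lower bounds: the minimum totals for
    Approaching the Target, At Target, and Advanced.
    """
    if len(cuts) != 3 or list(cuts) != sorted(cuts):
        raise ValueError("expected three ascending cut points")
    # bisect_right counts how many cut points the total meets or exceeds.
    return PERFORMANCE_LEVELS[bisect_right(cuts, total_mastered)]


def is_proficient(level: str) -> bool:
    """At Target and Advanced are reported together as proficient."""
    return level in ("At Target", "Advanced")


HYPOTHETICAL_CUTS = [12, 25, 38]  # illustration only, not real DLM cut points
```

A binary search over the cut list keeps the lookup correct for any number of levels, provided the cuts are sorted; a total exactly equal to a cut point falls into the higher level.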

7.2.1 Overall Performance

Table 7.6 reports the percentage of students achieving at each performance level on the spring 2025 ELA and mathematics assessments by grade. In ELA, the percentage of students who achieved at the At Target or Advanced levels (i.e., proficient) ranged from approximately 12% to 29%. In mathematics, the percentage of students who achieved at the At Target or Advanced levels ranged from approximately 9% to 33%.

| Grade | n | Emerging (%) | Approaching (%) | At Target (%) | Advanced (%) | At Target + Advanced (%) |
|---|---|---|---|---|---|---|
| English language arts | | | | | | |
| 3 | 12,742 | 65.2 | 16.8 | 16.9 | 1.1 | 18.0 |
| 4 | 12,044 | 67.7 | 20.0 | 11.4 | 1.0 | 12.3 |
| 5 | 12,014 | 57.2 | 16.9 | 22.7 | 3.1 | 25.8 |
| 6 | 11,865 | 55.0 | 21.5 | 17.7 | 5.8 | 23.5 |
| 7 | 11,792 | 45.5 | 28.1 | 21.1 | 5.3 | 26.4 |
| 8 | 11,450 | 44.1 | 27.9 | 25.2 | 2.8 | 28.0 |
| 9 | 5,728 | 36.4 | 34.4 | 22.2 | 7.0 | 29.2 |
| 10 | 3,043 | 38.0 | 37.2 | 23.0 | 1.8 | 24.8 |
| 11 | 8,587 | 38.7 | 32.9 | 23.2 | 5.3 | 28.4 |
| 12 | 159 | 40.9 | 34.0 | 22.6 | 2.5 | 25.2 |
| Mathematics | | | | | | |
| 3 | 12,729 | 61.4 | 12.7 | 19.1 | 6.9 | 25.9 |
| 4 | 12,024 | 58.1 | 9.2 | 25.1 | 7.6 | 32.7 |
| 5 | 11,999 | 53.5 | 21.9 | 11.5 | 13.2 | 24.7 |
| 6 | 11,855 | 68.2 | 16.6 | 8.0 | 7.2 | 15.2 |
| 7 | 11,775 | 60.2 | 22.9 | 10.8 | 6.1 | 16.9 |
| 8 | 11,443 | 60.2 | 30.3 | 6.9 | 2.5 | 9.4 |
| 9 | 5,712 | 43.8 | 37.8 | 14.6 | 3.7 | 18.3 |
| 10 | 3,041 | 57.5 | 31.6 | 10.2 | 0.7 | 10.9 |
| 11 | 8,568 | 46.1 | 27.9 | 24.6 | 1.4 | 26.0 |
| 12 | 163 | 47.9 | 27.6 | 23.9 | 0.6 | 24.5 |
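The proficiency ranges quoted above can be recomputed from the At Target + Advanced column of Table 7.6. The sketch below also shows that adding the rounded At Target and Advanced columns can differ from the reported combined value by 0.1 (for example, Grade 4 ELA: 11.4 + 1.0 = 12.4 versus 12.3), presumably because the combined percentage was computed from unrounded values.

```python
# At Target + Advanced percentages from Table 7.6, Grades 3 through 12.
ELA_COMBINED = [18.0, 12.3, 25.8, 23.5, 26.4, 28.0, 29.2, 24.8, 28.4, 25.2]
MATH_COMBINED = [25.9, 32.7, 24.7, 15.2, 16.9, 9.4, 18.3, 10.9, 26.0, 24.5]

# ELA At Target and Advanced columns, used to check the rounding gap.
ELA_AT_TARGET = [16.9, 11.4, 22.7, 17.7, 21.1, 25.2, 22.2, 23.0, 23.2, 22.6]
ELA_ADVANCED = [1.1, 1.0, 3.1, 5.8, 5.3, 2.8, 7.0, 1.8, 5.3, 2.5]


def proficiency_range(combined):
    """Lowest and highest At Target + Advanced percentage, rounded to whole percent."""
    return round(min(combined)), round(max(combined))


ela_range = proficiency_range(ELA_COMBINED)    # (12, 29)
math_range = proficiency_range(MATH_COMBINED)  # (9, 33)

# Largest difference between the summed rounded columns and the reported combined value.
max_gap = max(
    abs(at + adv - combined)
    for at, adv, combined in zip(ELA_AT_TARGET, ELA_ADVANCED, ELA_COMBINED)
)
```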

7.2.2 Subgroup Performance

Data collection for DLM assessments includes demographic data on gender, race, ethnicity, and English learning status. Table 7.7 and Table 7.8 summarize the disaggregated frequency distributions for ELA and mathematics performance levels, respectively, collapsed across all assessed grade levels. Although state education agencies each have their own rules for minimum student counts needed to support public reporting of results, small counts are not suppressed here because results are aggregated across states and individual students cannot be identified.

| Subgroup | Emerging (n) | Emerging (%) | Approaching (n) | Approaching (%) | At Target (n) | At Target (%) | Advanced (n) | Advanced (%) | At Target + Advanced (n) | At Target + Advanced (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Gender | | | | | | | | | | |
| Male | 32,431 | 53.1 | 14,566 | 23.8 | 11,970 | 19.6 | 2,138 | 3.5 | 14,108 | 23.1 |
| Female | 14,447 | 51.1 | 7,049 | 24.9 | 5,745 | 20.3 | 1,018 | 3.6 | 6,763 | 23.9 |
| Nonbinary/undesignated | 24 | 40.7 | 19 | 32.2 | 14 | 23.7 | 2 | 3.4 | 16 | 27.1 |
| Prefer Not to Say | 1 | >99.9 | 0 | 0.0 | 0 | 0.0 | 0 | 0.0 | 0 | 0.0 |
| Race | | | | | | | | | | |
| White | 23,934 | 52.0 | 10,851 | 23.6 | 9,469 | 20.6 | 1,795 | 3.9 | 11,264 | 24.5 |
| African American | 9,626 | 50.4 | 4,912 | 25.7 | 3,889 | 20.4 | 658 | 3.4 | 4,547 | 23.8 |
| Two or more races | 8,388 | 53.3 | 3,898 | 24.8 | 2,996 | 19.0 | 463 | 2.9 | 3,459 | 22.0 |
| Asian | 3,730 | 61.8 | 1,303 | 21.6 | 864 | 14.3 | 138 | 2.3 | 1,002 | 16.6 |
| American Indian | 906 | 47.7 | 508 | 26.8 | 404 | 21.3 | 80 | 4.2 | 484 | 25.5 |
| Native Hawaiian or Pacific Islander | 242 | 51.3 | 131 | 27.8 | 78 | 16.5 | 21 | 4.4 | 99 | 21.0 |
| Alaska Native | 77 | 55.0 | 31 | 22.1 | 29 | 20.7 | 3 | 2.1 | 32 | 22.9 |
| Hispanic ethnicity | | | | | | | | | | |
| Non-Hispanic | 34,486 | 52.4 | 15,854 | 24.1 | 13,150 | 20.0 | 2,288 | 3.5 | 15,438 | 23.5 |
| Hispanic | 12,417 | 52.5 | 5,780 | 24.4 | 4,579 | 19.4 | 870 | 3.7 | 5,449 | 23.0 |
| English learning (EL) participation | | | | | | | | | | |
| Not EL eligible or monitored | 43,511 | 52.3 | 20,081 | 24.1 | 16,595 | 20.0 | 2,971 | 3.6 | 19,566 | 23.5 |
| EL eligible or monitored | 3,392 | 54.1 | 1,553 | 24.8 | 1,134 | 18.1 | 187 | 3.0 | 1,321 | 21.1 |

| Subgroup | Emerging (n) | Emerging (%) | Approaching (n) | Approaching (%) | At Target (n) | At Target (%) | Advanced (n) | Advanced (%) | At Target + Advanced (n) | At Target + Advanced (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Gender | | | | | | | | | | |
| Male | 34,715 | 56.9 | 12,712 | 20.8 | 9,412 | 15.4 | 4,179 | 6.8 | 13,591 | 22.3 |
| Female | 16,805 | 59.5 | 6,316 | 22.4 | 3,716 | 13.2 | 1,394 | 4.9 | 5,110 | 18.1 |
| Nonbinary/undesignated | 30 | 50.8 | 18 | 30.5 | 10 | 16.9 | 1 | 1.7 | 11 | 18.6 |
| Prefer Not to Say | 1 | >99.9 | 0 | 0.0 | 0 | 0.0 | 0 | 0.0 | 0 | 0.0 |
| Race | | | | | | | | | | |
| White | 26,542 | 57.7 | 10,046 | 21.8 | 6,665 | 14.5 | 2,744 | 6.0 | 9,409 | 20.5 |
| African American | 10,723 | 56.3 | 4,109 | 21.6 | 2,924 | 15.3 | 1,301 | 6.8 | 4,225 | 22.2 |
| Two or more races | 9,120 | 58.0 | 3,341 | 21.2 | 2,327 | 14.8 | 949 | 6.0 | 3,276 | 20.8 |
| Asian | 3,848 | 64.0 | 963 | 16.0 | 818 | 13.6 | 384 | 6.4 | 1,202 | 20.0 |
| American Indian | 969 | 51.1 | 447 | 23.6 | 323 | 17.0 | 157 | 8.3 | 480 | 25.3 |
| Native Hawaiian or Pacific Islander | 266 | 56.6 | 106 | 22.6 | 67 | 14.3 | 31 | 6.6 | 98 | 20.9 |
| Alaska Native | 83 | 59.7 | 34 | 24.5 | 14 | 10.1 | 8 | 5.8 | 22 | 15.8 |
| Hispanic ethnicity | | | | | | | | | | |
| Non-Hispanic | 38,136 | 58.1 | 14,066 | 21.4 | 9,561 | 14.6 | 3,917 | 6.0 | 13,478 | 20.5 |
| Hispanic | 13,415 | 56.8 | 4,980 | 21.1 | 3,577 | 15.1 | 1,657 | 7.0 | 5,234 | 22.2 |
| English learning (EL) participation | | | | | | | | | | |
| Not EL eligible or monitored | 48,029 | 57.8 | 17,725 | 21.3 | 12,194 | 14.7 | 5,089 | 6.1 | 17,283 | 20.8 |
| EL eligible or monitored | 3,522 | 56.2 | 1,321 | 21.1 | 944 | 15.1 | 485 | 7.7 | 1,429 | 22.8 |
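The subgroup tables report row percentages: each subgroup’s counts are divided by that subgroup’s own total, not by the overall total (for example, 32,431 of the 61,105 male students with an ELA performance level, or 53.1%, were at the Emerging level). A minimal sketch of that cross-tabulation follows, assuming hypothetical student-level records with a subgroup field and a `performance_level` field; the function and field names are illustrative.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Mapping, Tuple

LEVELS = ["Emerging", "Approaching", "At Target", "Advanced"]


def disaggregate(
    records: Iterable[Mapping[str, str]], group_field: str
) -> Dict[str, Dict[str, Tuple[int, float]]]:
    """Cross-tabulate performance levels by subgroup with row percentages."""
    counts = defaultdict(Counter)
    for record in records:
        counts[record[group_field]][record["performance_level"]] += 1

    table = {}
    for group, level_counts in counts.items():
        n = sum(level_counts.values())  # subgroup total is the row denominator
        row = {level: (level_counts[level], 100 * level_counts[level] / n) for level in LEVELS}
        proficient = level_counts["At Target"] + level_counts["Advanced"]
        row["At Target + Advanced"] = (proficient, 100 * proficient / n)
        table[group] = row
    return table


# Hypothetical student-level records.
records = [
    {"el_status": "EL eligible or monitored", "performance_level": "Emerging"},
    {"el_status": "EL eligible or monitored", "performance_level": "Emerging"},
    {"el_status": "EL eligible or monitored", "performance_level": "At Target"},
    {"el_status": "EL eligible or monitored", "performance_level": "Advanced"},
    {"el_status": "Not EL eligible or monitored", "performance_level": "Approaching"},
]
by_el = disaggregate(records, "el_status")
```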

7.3 Mastery Results

As previously described, student performance levels are determined by applying cut points to the total number of linkage levels mastered in each subject. This section summarizes student mastery of assessed EEs and linkage levels, including how students demonstrated mastery from among three scoring rules and the highest linkage level students tended to master.

7.3.1 Mastery Status Assignment

As described in Chapter 5 of the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022), student responses to assessment items are used to estimate the posterior probability that the student mastered each of the assessed linkage levels using diagnostic classification modeling. The linkage levels, in order, are Initial Precursor, Distal Precursor, Proximal Precursor, Target, and Successor. A student can be a master of zero, one, two, three, four, or all five linkage levels within the order constraints. For example, if a student masters the Proximal Precursor level, they also master all linkage levels lower in the order (i.e., Initial Precursor and Distal Precursor). Students with a posterior probability of mastery greater than or equal to .80 are assigned a linkage level mastery status of 1, or mastered. Students with a posterior probability of mastery less than .80 are assigned a linkage level mastery status of 0, or not mastered. Maximum uncertainty in the mastery status occurs when the probability is .5, and maximum certainty occurs when the probability approaches 0 or 1.
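The threshold rule and order constraint described above can be sketched in Python. This is a minimal illustration rather than the operational DLM scoring code; the function names are hypothetical, and only the .80 cutoff and the linkage level order come from the text.

```python
from typing import List

# Linkage levels in order, lowest to highest (from the text).
LINKAGE_LEVELS = [
    "Initial Precursor",
    "Distal Precursor",
    "Proximal Precursor",
    "Target",
    "Successor",
]
MASTERY_THRESHOLD = 0.80


def mastery_status(posterior: float) -> int:
    """Return 1 (mastered) if the posterior probability is at least .80, else 0."""
    if not 0.0 <= posterior <= 1.0:
        raise ValueError("posterior must be between 0 and 1")
    return 1 if posterior >= MASTERY_THRESHOLD else 0


def implied_mastery(highest: str) -> List[str]:
    """Order constraint: mastering a level implies mastery of every lower level."""
    return LINKAGE_LEVELS[: LINKAGE_LEVELS.index(highest) + 1]


# Hypothetical posteriors: .80 counts as mastered; .50 is maximally uncertain.
statuses = [mastery_status(p) for p in (0.95, 0.80, 0.79, 0.50)]  # [1, 1, 0, 0]
```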

In addition to the calculated probability of mastery, students could be assigned mastery of linkage levels within an EE in two other ways: by correctly answering at least 80% of the items administered at the linkage level, or through the two-down scoring rule. The two-down scoring rule was implemented to guard against students assessed at the highest linkage levels being overly penalized for incorrect responses. When a student does not demonstrate mastery of the assessed linkage level, mastery is assigned at two linkage levels below the level that was assessed. The two-down rule is based on linkage level ordering evidence and the underlying learning map structure, which is presented in Chapter 2 of the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

As an example of the two-down scoring rule, take a student who tested only on the Target linkage level of an EE. If the student demonstrated mastery of the Target linkage level, as defined by the .80 posterior probability of mastery cutoff or the 80% correct rule, then all linkage levels below and including the Target level would be categorized as mastered. If the student did not demonstrate mastery on the tested Target linkage level, then mastery would be assigned at two linkage levels below the tested linkage level (i.e., mastery of the Distal Precursor), rather than showing no evidence of EE mastery at all.

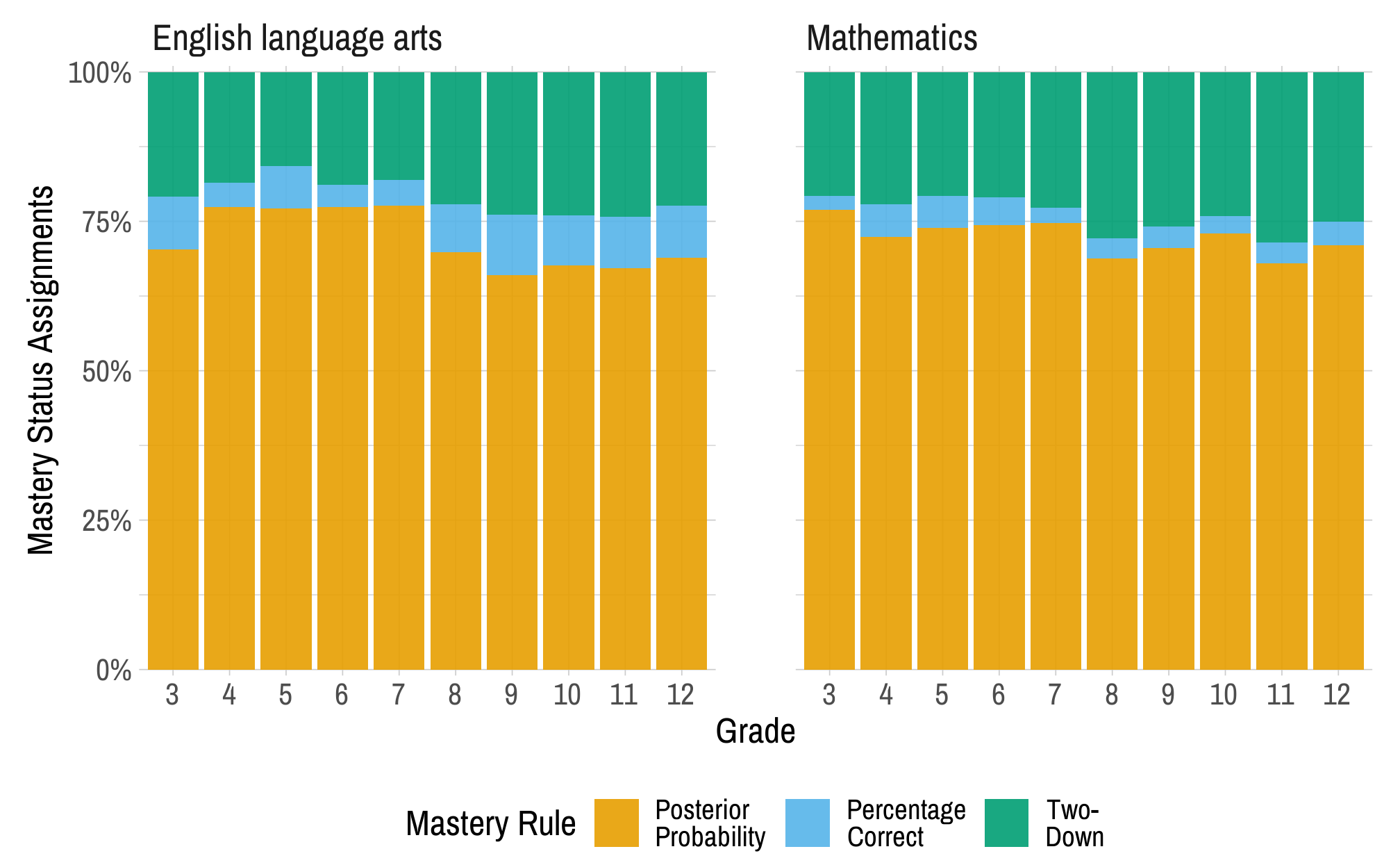

The percentage of mastery statuses obtained by each scoring rule was calculated to evaluate how each mastery assignment rule contributed to students’ linkage level mastery statuses during the 2024–2025 administration of DLM assessments (see Figure 7.1). The posterior probability was given first priority. That is, if scoring rules agreed on the highest linkage level mastered within an EE (e.g., the posterior probability and 80% correct rule both indicate the Target linkage level as the highest mastered), the mastery status was counted as obtained via the posterior probability. If mastery was not demonstrated by meeting the posterior probability threshold, the 80% correct rule was applied next, followed by the two-down rule. As a result, EEs that a student was assessed on at the lowest two linkage levels (i.e., Initial Precursor and Distal Precursor) are never categorized as having mastery assigned by the two-down rule: either the student mastered the assessed linkage level, and the EE was counted under the posterior probability or 80% correct rule, or all three scoring rules agreed on the score (i.e., no evidence of mastery), in which case preference was given to the posterior probability. Across grades and subjects, approximately 66%–78% of mastered linkage levels were derived from the posterior probability obtained from the modeling procedure. Approximately 2%–10% of linkage levels were assigned mastery status by the percentage correct rule. The remaining 16%–28% of mastered linkage levels were determined by the two-down rule.

Figure 7.1: Linkage Level Mastery Assignment by Mastery Rule for Each Subject and Grade
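The three scoring rules and their priority order can be combined into a single function per EE. This sketch assumes, as in the Target example above, that the student was assessed at a single linkage level of the EE; `score_ee` and the rule labels are hypothetical names, and the operational scoring pipeline may differ.

```python
from typing import Optional, Tuple

LINKAGE_LEVELS = [
    "Initial Precursor",
    "Distal Precursor",
    "Proximal Precursor",
    "Target",
    "Successor",
]


def score_ee(
    assessed: str, posterior: float, proportion_correct: float
) -> Tuple[Optional[str], str]:
    """Return (highest linkage level mastered or None, scoring rule credited).

    Priority: posterior probability, then 80% correct, then two-down.
    """
    if posterior >= 0.80:
        return assessed, "posterior probability"
    if proportion_correct >= 0.80:
        return assessed, "percent correct"
    two_down = LINKAGE_LEVELS.index(assessed) - 2
    if two_down >= 0:
        return LINKAGE_LEVELS[two_down], "two-down"
    # Assessed at Initial or Distal Precursor and not mastered: every rule
    # agrees there is no evidence of mastery, credited to the posterior.
    return None, "posterior probability"


# The Target example from the text: not mastered, so Distal Precursor is assigned.
example = score_ee("Target", posterior=0.45, proportion_correct=0.5)
# ('Distal Precursor', 'two-down')
```

Tallying the second element of each result across students and EEs gives the kind of rule-by-rule percentages summarized in Figure 7.1.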

Because correct responses to all items measuring the linkage level are often necessary to achieve a posterior probability above the .80 threshold, the percentage correct rule overlaps considerably with the posterior probabilities (but is second in priority). The percentage correct rule did provide mastery status in instances where correctly responding to all or most items still resulted in a posterior probability below the mastery threshold. The agreement between the posterior probability and percentage correct rule was quantified by examining the rate of agreement between the highest linkage level mastered for each EE for each student using each method. For the 2024–2025 operational year, the rate of agreement between the two methods was 86%. When the two methods disagreed, the posterior probability method indicated a higher level of mastery (and therefore was implemented for scoring) in 59% of cases. Thus, in some instances, the posterior probabilities allowed students to demonstrate mastery when the percentage correct was lower than 80% (e.g., a student completed a four-item testlet and answered three of four items correctly).
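The agreement analysis above can be illustrated with a short sketch. The input format (one pair of highest-mastered levels per student and EE, with `None` for no evidence of mastery) and the function name are assumptions for illustration; the example data are made up and do not reproduce the reported 86% and 59% figures.

```python
from typing import Optional, Sequence, Tuple

# Rank of each outcome; None means no evidence of mastery.
LEVEL_RANK = {
    None: -1,
    "Initial Precursor": 0,
    "Distal Precursor": 1,
    "Proximal Precursor": 2,
    "Target": 3,
    "Successor": 4,
}

Pair = Tuple[Optional[str], Optional[str]]  # (posterior method, percent-correct method)


def agreement_summary(pairs: Sequence[Pair]) -> Tuple[float, float]:
    """Return (agreement rate, share of disagreements where the posterior is higher)."""
    if not pairs:
        raise ValueError("no student-EE pairs supplied")
    disagreements = [(p, c) for p, c in pairs if p != c]
    agreement_rate = (len(pairs) - len(disagreements)) / len(pairs)
    if not disagreements:
        return agreement_rate, 0.0
    posterior_higher = sum(1 for p, c in disagreements if LEVEL_RANK[p] > LEVEL_RANK[c])
    return agreement_rate, posterior_higher / len(disagreements)


# Hypothetical student-EE results.
pairs = [
    ("Target", "Target"),
    ("Target", "Target"),
    (None, None),
    ("Target", None),  # e.g., 3 of 4 items correct, posterior still at or above .80
    (None, "Target"),  # e.g., all items correct, posterior still below .80
]
rate, posterior_higher_share = agreement_summary(pairs)  # (0.6, 0.5)
```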

7.3.2 Linkage Level Mastery

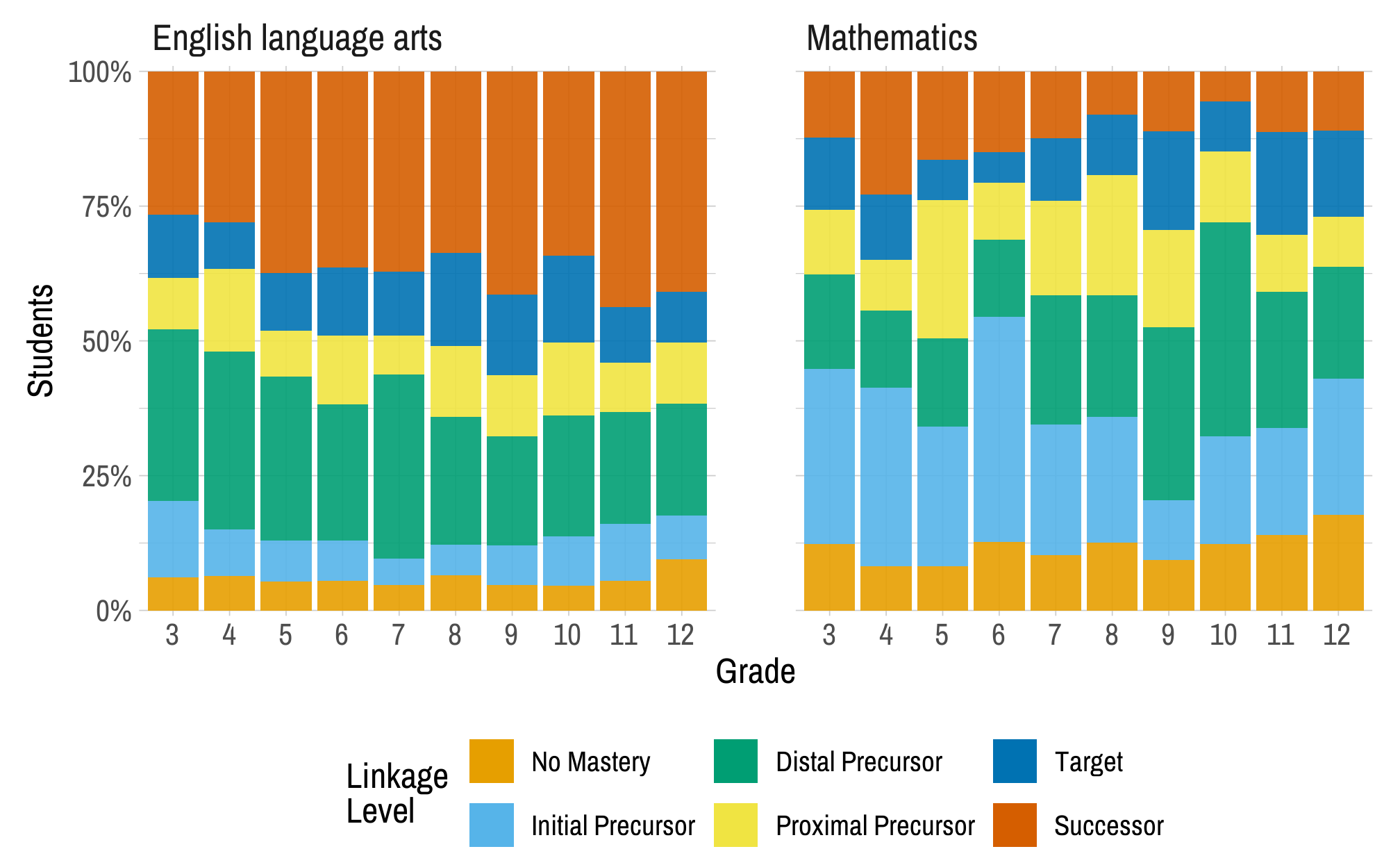

Scoring for DLM assessments determines the highest linkage level mastered for each EE. This section summarizes the distribution of students by highest linkage level mastered across all EEs. For each student, the highest linkage level mastered across all tested EEs was calculated. Then, for each grade, the number of students with each linkage level as their highest mastered linkage level across all EEs was summed and then divided by the total number of students who tested in the grade and subject. This resulted in the proportion of students for whom each level was the highest linkage level mastered.

Figure 7.2 displays the percentage of students who mastered each linkage level as the highest linkage level across all assessed EEs for ELA and mathematics. For example, across all Grade 3 mathematics EEs, the Initial Precursor level was the highest level that 32% of students mastered. The percentage of students who mastered the Target or Successor linkage level as their highest level ranged from approximately 37% to 56% in ELA and from approximately 15% to 35% in mathematics.

Figure 7.2: Students’ Highest Linkage Level Mastered Across English Language Arts and Mathematics Essential Elements by Grade in 2024–2025
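The calculation described for Figure 7.2 can be sketched as follows. The input layout, the function name, and the separate "No evidence of mastery" category for students who mastered no linkage level are assumptions made for illustration; as in the text, the denominator is every student tested in the grade and subject.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

LINKAGE_LEVELS = [
    "Initial Precursor",
    "Distal Precursor",
    "Proximal Precursor",
    "Target",
    "Successor",
]
NO_EVIDENCE = "No evidence of mastery"  # hypothetical label

# (student_id, grade, subject, highest level mastered on one EE, or None)
EEResult = Tuple[str, int, str, Optional[str]]


def highest_level_distribution(
    results: Iterable[EEResult],
) -> Dict[Tuple[int, str], Dict[str, float]]:
    """Percent of students whose highest mastered level across all EEs is each level."""
    best: Dict[Tuple[str, int, str], int] = {}
    for student, grade, subject, level in results:
        rank = -1 if level is None else LINKAGE_LEVELS.index(level)
        key = (student, grade, subject)
        best[key] = max(best.get(key, -1), rank)

    counts = defaultdict(Counter)
    for (_, grade, subject), rank in best.items():
        label = NO_EVIDENCE if rank < 0 else LINKAGE_LEVELS[rank]
        counts[(grade, subject)][label] += 1

    distribution = {}
    for key, label_counts in counts.items():
        total = sum(label_counts.values())  # all students tested in the grade and subject
        distribution[key] = {label: 100 * n / total for label, n in label_counts.items()}
    return distribution


# Hypothetical Grade 3 mathematics results for four students.
results = [
    ("s1", 3, "Mathematics", "Initial Precursor"),
    ("s1", 3, "Mathematics", None),
    ("s2", 3, "Mathematics", "Target"),
    ("s2", 3, "Mathematics", "Distal Precursor"),
    ("s3", 3, "Mathematics", "Successor"),
    ("s4", 3, "Mathematics", None),
]
grade3_math = highest_level_distribution(results)[(3, "Mathematics")]
```

Summing the Target and Successor entries for a grade gives the "Target or Successor" percentage quoted in the text (50% for this made-up example).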

7.4 Data Files

DLM assessment results were made available to DLM state education agencies following the spring 2025 administration. Similar to previous years, the General Research File (GRF) contained student results, including each student’s highest linkage level mastered for each EE and final performance level for the subject for all students who completed any testlets. In addition to the GRF, the states received several supplemental files. Consistent with previous years, the special circumstances file provided information about which students and EEs were affected by extenuating circumstances (e.g., chronic absences), as defined by each state. State education agencies also received a supplemental file to identify exited students. The exited students file included all students who exited at any point during the academic year. In the event of observed incidents during assessment delivery, state education agencies are provided with an incident file describing students affected; however, no incidents occurred during 2024–2025.

Consistent with previous delivery cycles, state education agencies were provided with a 2-week window following data file delivery to review the files and invalidate student records in the GRF. Decisions about whether to invalidate student records are informed by individual state policy. If changes were made to the GRF, state education agencies submitted final GRFs via Educator Portal. The final GRF was used to generate score reports.

7.5 Score Reports

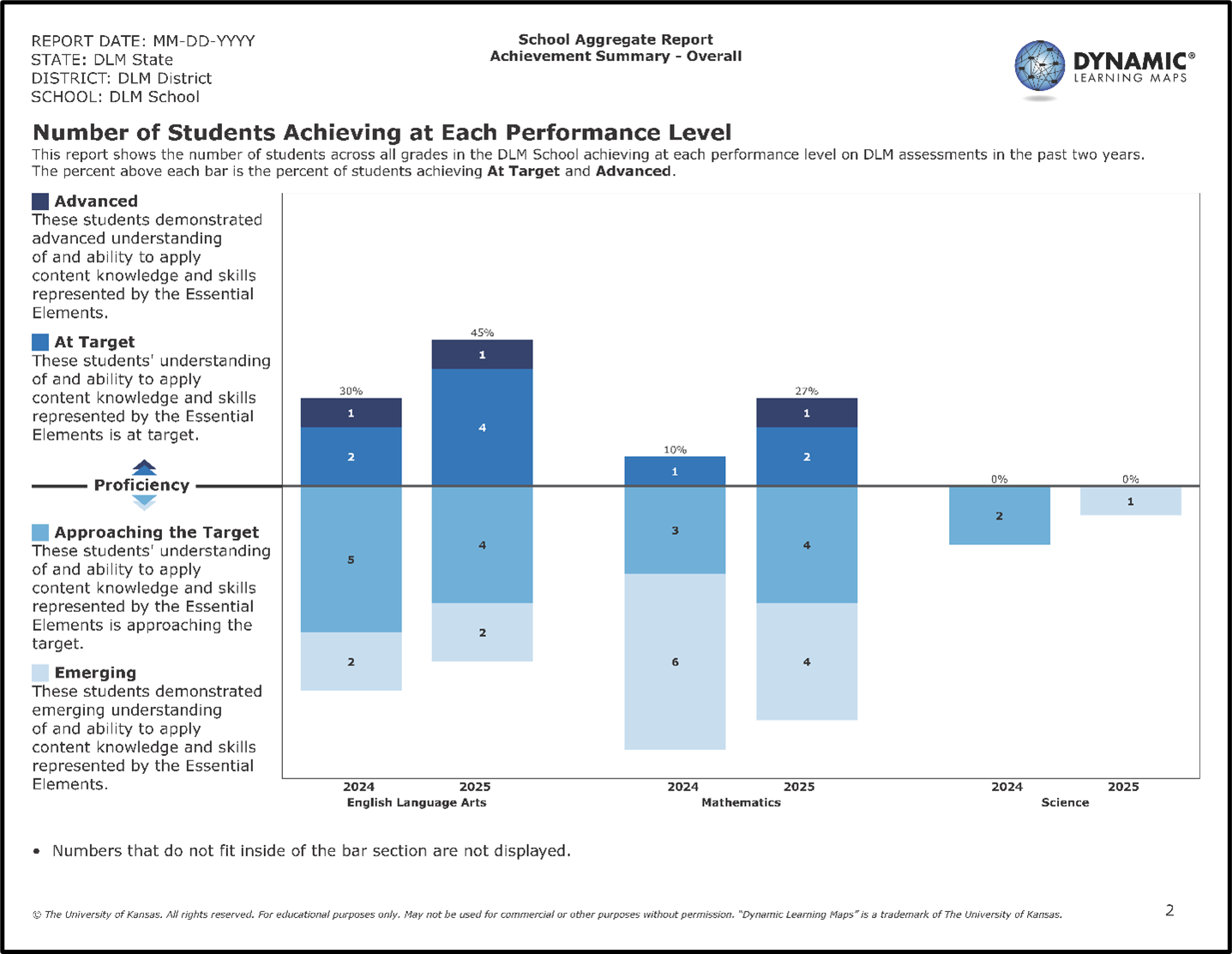

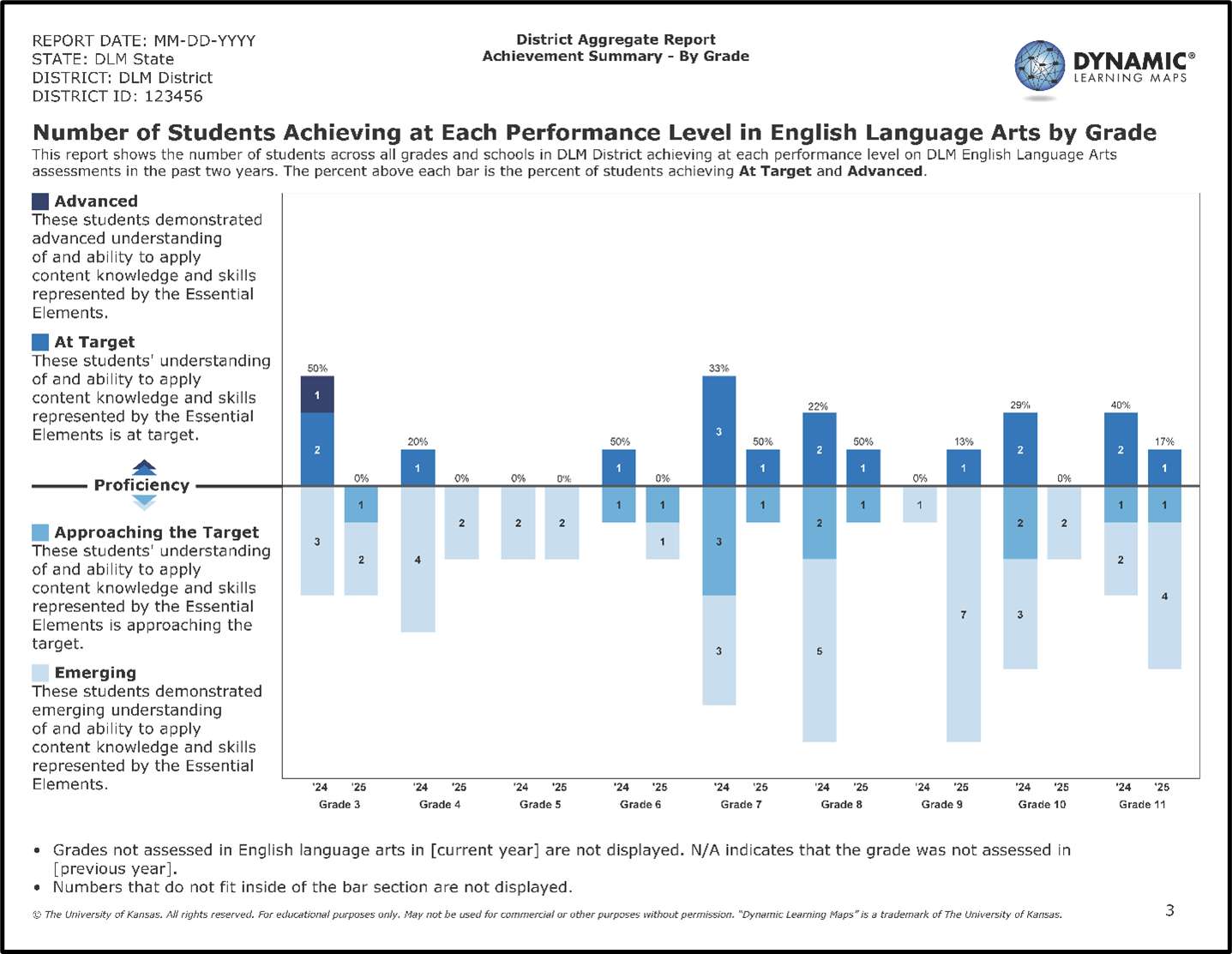

Assessment results were provided to state education agencies to distribute to parents/guardians, educators, and local education agencies. Individual Student Score Reports summarized student performance on the assessment by subject. Several aggregated reports were provided to state and local education agencies, including reports for the classroom, school, district, and state.

While no changes were made to the structure of Individual Student Score Reports during spring 2025, the School and District Aggregate Reports underwent a full redesign. This redesign aimed to improve clarity and usability. For a complete description of all score reports, see Chapter 7 of the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

7.5.1 Individual Student Score Reports

Similar to previous years, Individual Student Score Reports included two sections: a Performance Profile, which describes student performance in the subject overall, and a Learning Profile, which provides detailed reporting of student mastery of individual skills. During 2024–2025, existing helplet videos continued to be available to support interpretation of score reports. Further information on evidence related to the development, interpretation, and use of Individual Student Score Reports and sample pages of the Performance Profile and Learning Profile can be found in Chapter 7 of the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

7.5.1.1 Parent Feedback on DLM Score Reports

Parents are critical stakeholders and users of DLM assessment results and score reports. We previously collected parent feedback on alternate assessment reporting (Nitsch, 2013) and studied teachers’ interpretation and use of DLM score reports (e.g., Clark et al., 2023); however, we had not yet collected parent feedback specifically on DLM score reports. To address this gap, we conducted a study to collect feedback on DLM score reports from parents of children who take DLM assessments.

To recruit participants, we worked with parent centers in three states to identify and reach parents and guardians who had a child (or children) taking DLM assessments. We conducted virtual (video or phone) interviews with 21 parents or guardians. Due to scheduling constraints, most feedback sessions were conducted individually, although several included two participants. During the interviews, participants referred to an example DLM score report we provided, which showed Grade 5 ELA assessment results for a hypothetical student. The interviewers followed a semi-structured protocol to elicit parents’ perceptions of the interpretability and usability of the score report. Interviews were coded and analyzed to identify themes around interpretation and use.

Participants generally found the mastery-based score report to be well designed, interpretable, and useful, with several strengths and some areas for improvement. Design features, including clear organization, logical ordering of information, use of visual and textual elements, and color and language choices, supported participants’ understanding of mastery results. Participants valued the emphasis on students’ strengths rather than deficits. Most participants correctly interpreted performance levels and skill-level mastery, using the Learning Profile to identify mastered skills and to understand progression toward grade-level targets; however, some experienced confusion connecting information across reporting levels (i.e., across sections for overall performance, mastery by Area, and Essential Element mastery), particularly due to unfamiliar codes and unfamiliar nesting of reporting levels. Language was generally viewed as positive and accessible, though some participants found certain descriptors and technical terms overly complex or insufficiently explained, suggesting a need for simplification, examples, or definitions. Participants described a wide range of ways they could envision using the report, including understanding overall performance, tracking progress over time, guiding at-home learning, supporting conversations and advocacy with educators, informing instructional goals and next steps for learning, and sharing information with other service providers. Participants also noted potential barriers to use, such as information overload, discouragement resulting from low mastery results, or the report being set aside without guided discussion.

We used findings from the study to inform two changes to the score reports. We added a sentence in the Area results to help describe the connections between the Area summaries and individual skills reported in the Learning Profile. We also added labels for all mastery levels (e.g., Distal Precursor) on the Learning Profile to better support users’ understanding. These changes were implemented for the 2023–2024 school year. Other feedback from the study is being considered for future reporting improvements.

7.5.2 School and District Aggregate Score Reports

Aggregate score reports describe student achievement by subject at the class, school, district, and state levels. As part of the DLM program’s continuous improvement efforts, school and district aggregate reports were updated to better meet the needs of these user groups. Score report design was informed by cadres of school and district staff from DLM states, along with internal ATLAS staff, the DLM Technical Advisory Committee, and the DLM Governance Board. We first defined information needs for each group and then iteratively designed reports to meet those needs. The updated School and District Aggregate Reports use graphical formats to communicate the number and percentage of students who achieved at each performance level, for each subject. As with prior versions of these reports, the reports include data from any student who was assessed on at least one DLM testlet. The School Aggregate Report combines data across all students in the school. The District Aggregate Report combines data from all students in the district, providing the information overall and by grade. Both School and District Aggregate Reports include student performance for the current year and the previous year. Figures 7.3 and 7.4 display a sample page from each report.

Supplemental documentation, including interpretive guides, was updated to reflect the changes and describe intended interpretation and use. These resources are available on the DLM Scoring & Reporting Resources webpage (https://dynamiclearningmaps.org/srr/ye).

Figure 7.3: Sample Page of the School Aggregate Report

Figure 7.4: Sample Page of the District Aggregate Report

7.6 Quality-Control Procedures for Data Files and Score Reports

No changes were made to the quality-control procedures for data files and score reports for 2024–2025. For a complete description of quality-control procedures, see Chapter 7 of the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

7.7 Conclusion

Results for DLM assessments include students’ overall performance levels and mastery decisions for each assessed EE and linkage level. During spring 2025, ELA and mathematics assessments were administered to 89,596 students in 15 states adopting the Year-End model. Between 9% and 33% of students achieved at the At Target or Advanced levels across all grades and subjects. Of the three scoring rules, linkage level mastery status was most frequently assigned by the posterior probability of mastery, and students tended to demonstrate mastery of the Target or Successor level at higher rates in ELA than in mathematics.

Following the spring 2025 administration, four data files were delivered to state education agencies: the GRF, the special circumstance code file, the exited students file, and an incident file. No changes were made to the structure of data files, individual student score reports, or quality-control procedures during 2024–2025. Analyses of linkage level assignment from fall to spring showed that most linkage levels remained stable across windows, though upward adjustments were more common than downward, often supporting mastery at higher levels. Parent feedback informed changes to the Individual Student Score Reports, including clarifying language and added mastery level labels in the Learning Profile. Additionally, School and District Aggregate Reports were redesigned based on stakeholder feedback to better meet user needs.